The Problem We're Avoiding with AI

We, as a species, have been thrown into exceptionally dangerous circumstances with the new breed of AI. I’ve been waiting to write this particular email on this particular topic, because I found myself waffling between opinions and false understanding.

I’ve been examining AI from philosophical, technical, political, and even science-fictional standpoints. I don’t have the answers yet—no one really does. But I have stumbled upon some concerning and critical topics that aren’t prevalent in the AI discussion—at least, not in the general population.

AI has been something I’ve been comfortable with, something I’ve sought after, and something I’ve advocated for up until recently. To set the stage, let’s quickly discuss what AI actually is and what the real concerns are that we should be taking seriously.

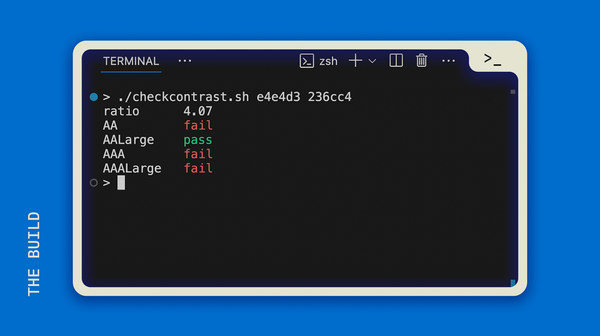

Statistics

AI is not actual intelligence; it is artificial intelligence. It is based on statistics. It predicts what will best accomplish its task, whether that’s responding to your question or creating imagery from your prompts.

This is absolutely fundamental to understand before we dive into the ramifications of AI.

It is certainly not sentient. We can give space for that possibility in the future, but that argument is a clever distraction.

HADD

Humans have a bias. It’s built in, and it’s exceptionally powerful and persuasive to us. Our brains act as a Hyperactive Agency Detection Device (HADD): we are quick to assume that an intentional agent is behind whatever we notice. There are theories that our human inclination to connect events that happen around us to supernatural beings is largely because of HADD.

We hear a twig snap in the forest near us, but we know we’re alone—was it a spirit? Was it evil? A monster?

Haunted houses or spaces where “things happen” are surely evidence for ghosts.

Calamities fall upon some city or (even worse) some ethnic group and we say, “Surely, it is God’s punishment for their wickedness.”

All of this is exacerbated by our biological wiring. We can’t help but personify the inanimate, breathe life into containers where there is none, and project our feelings and thoughts onto creatures or even people that do not share those same feelings and thoughts.

We see a response from ChatGPT and it feels like it is talking to us, like it’s human. It’s using our language, and I don’t just mean it’s using “English” or “Spanish.” It uses phrases that express emotions: “Certainly! I can help you with that…” or “I’m sorry for the confusion…” or “You’re right…”

This will continue to feel more and more like you’re interacting with a being. Something with feelings and intelligence and maybe even desires.

Remember, this is just a prediction model. What is the most likely, best response to the prompt given? That’s AI.

Not all of this is HADD theory, but anecdotal experience tells me that we humans do a very good job of distracting ourselves from real, root problems because it’s so easy to dehumanize actual people and humanize non-humans.

I’m not arguing this HADD-bias is always bad, but without awareness, we can’t move forward.

The Real Problem with AI

People are always—have always been—the real problem with AI.

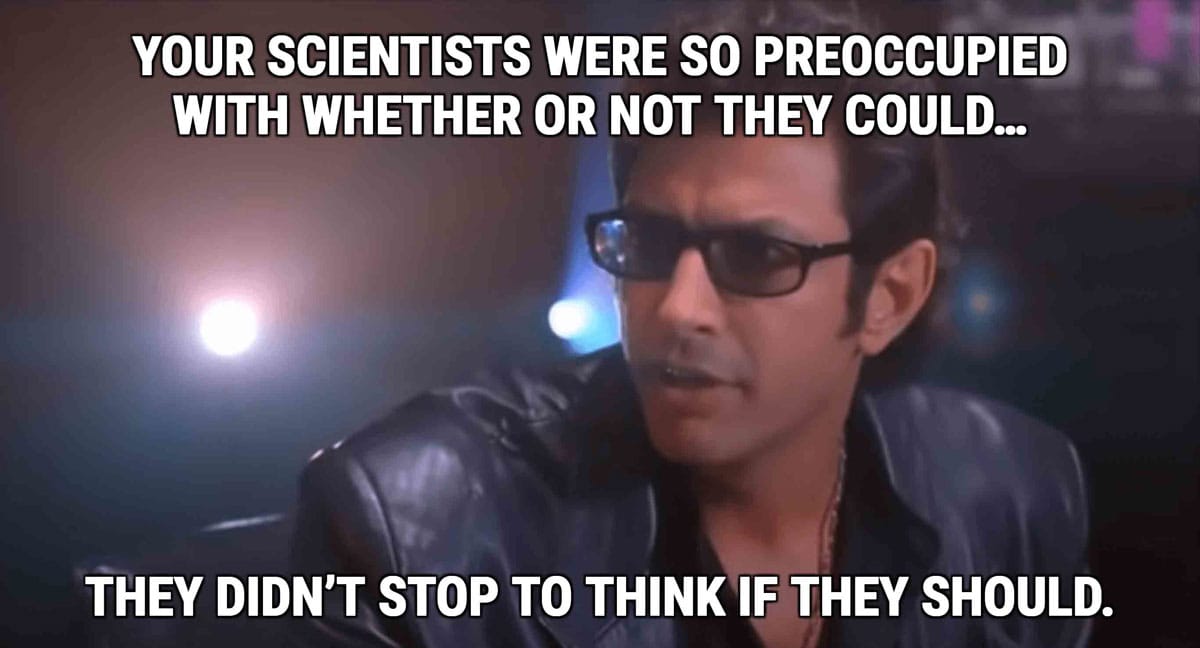

We are playing with the next nukes. And we’re doing so without responsibility, without wisdom, without restraint, and without any real safety measures.

Tristan Harris puts it perfectly: “You can’t have the power of gods without the love, prudence, and wisdom of gods.” (He may have been referencing Daniel Schmachtenberger; I’m not sure who the original source is.)

Love

How is it that we as humans have an immense capacity to love, have religions whose underlying basis is love, have an inclination to love, and still end up drawing borders, starting wars, greedily exploiting, and creating “others” or “monsters” of groups of people?

How do we truly instill “love for each human” as a vital, indispensable core value in our societies?

Without that kind of love, AI will be used to destroy what we’ve built and cause suffering at a scale we have not yet seen.

Prudence

Caution. In what world do business leaders choose caution over profits? Probably the world that survives.

The problem is that we created a race, and when a race is started, no one is incentivized to stop racing. At best, we have the fallacious attitude, “Better us than them.” Better we create it first, ’cause the other guy is not going to be as safe, smart, or careful as we are.

In a traditional system, a young person who has tapped into some massive amount of power would have an elder come by and cut them down a bit: “Slow down, you’re not ready, you need to wait.”

We have no elders in the AI space. No one tempering the possibility with patience, caution, respect for the danger. Instead, the environment of tech companies is: “Move, ship, go.” Get it done yesterday and always be first to market. [Josh Schrei]

Policies and regulation might be able to help here, but self-restraint and big-picture thinking will become critical skills for everyone to develop and encourage. It’s no longer acceptable to run, unchecked, unaccountable in the tech space.

Wisdom

This needs to become the pursuit before developing AI further. Before we hand irrevocable power to anyone without wisdom. Again, I’ll pull what Tristan Harris has said: “If 99% of people want good for the world and 1% doesn’t, it’s still a broken world.”

The problem with wisdom is the same it has always been: it’s hard.

When faced with a choice between a path full of hardship, self-sacrifice, obstacles, and delayed rewards and a path that is easy, clear, free, and instantly gratifying, it’s all too easy to choose the path of least resistance. Especially when you have not developed the wisdom to pursue wisdom. And we’re back in the spiral.

What do we do?

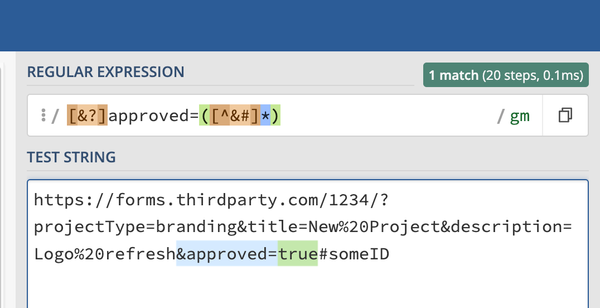

Taking AI seriously is the first step. It’s easy to pass off AI as a silly gimmick or something that “is coming for our jobs.” AI unlocks doors we thought were securely closed. Think of past topics we’ve discussed here, like content-based verification: your voice, your likeness in photo or video, details about your life.

Remember that we’re not interacting with a being. We cannot allow young people to develop relationships with AI. They must know that it is an illusion and that it cannot be fully trusted to provide safe, wise, or accurate answers or advice.

Josh Comeau put it well: AI sounds 100% confident but is only 80% accurate, making it hard for us not to believe it. When AI appears so smart, so confident, how could we possibly discern its errors?

We need to put pressure on government to create regulations and to fight against unchecked powers with deep pockets.

I highly recommend listening to the podcast “Your Undivided Attention.” It started with the “social dilemma,” taking on the dangers of social media and the addictive nature of what we’ve built. As of 2023, they’ve expanded the discussion to the “AI dilemma” and provide lots of important insights into dangers and potential solutions.